Marvell Technology recently announced that its Structera CXL platform is interoperable with both AMD EPYC CPUs and 5th Gen Intel Xeon Scalable platforms, a significant milestone for Compute Express Link (CXL) memory.

Cloud data centers often must deploy servers from multiple CPU vendors. While mixing AMD and Intel CPU-based systems allows faster data center scaling, it can also reduce performance because high-speed memory devices do not always interoperate equally well with both.

AMD EPYC CPU.

Marvell’s announcement shows that the same Structera CXL devices work with both AMD EPYC and 5th Gen Intel Xeon CPUs, so servers built on either processor family can benefit from the speed and functionality gains of CXL memory acceleration.

What Is Compute Express Link (CXL)?

CXL is an open standard interconnect that enables coherent memory sharing between a host processor and attached chips or devices. It defines three sub-protocols: CXL.io, which is based on PCIe (currently 5.0, moving to 6.0) and handles device discovery, configuration, and conventional I/O; CXL.cache, which lets a device coherently access and cache host memory; and CXL.mem, which gives a host CPU load/store access to device-attached memory. In effect, CXL allows a system to use memory from different sources as though it were local.
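To make the CXL.mem idea concrete: on Linux, memory that is reachable by load/store but has no CPU cores of its own commonly appears as a CPU-less NUMA node. The sketch below scans the standard sysfs NUMA directory for such nodes. It is illustrative only; the function names are hypothetical, it assumes a Linux host where any CXL memory has been onlined as system RAM, and a CPU-less node is not proof of CXL (other memory types can appear the same way).

```python
from pathlib import Path


def parse_cpulist(text: str) -> list[int]:
    """Expand a Linux cpulist string such as "0-3,8" into CPU ids."""
    cpus = []
    for part in text.strip().split(","):
        if not part:
            continue  # an empty cpulist means the node has no CPUs
        if "-" in part:
            lo, hi = part.split("-")
            cpus.extend(range(int(lo), int(hi) + 1))
        else:
            cpus.append(int(part))
    return cpus


def cpuless_nodes(root: str = "/sys/devices/system/node") -> list[int]:
    """Return ids of NUMA nodes that report memory but no CPUs."""
    found = []
    for node_dir in Path(root).glob("node[0-9]*"):
        cpulist = node_dir / "cpulist"
        meminfo = node_dir / "meminfo"
        if not cpulist.is_file() or not meminfo.is_file():
            continue
        has_cpus = bool(parse_cpulist(cpulist.read_text()))
        total_kb = 0
        # Per-node meminfo lines look like: "Node 1 MemTotal:  2000 kB"
        for line in meminfo.read_text().splitlines():
            fields = line.split()
            if "MemTotal:" in fields:
                total_kb = int(fields[fields.index("MemTotal:") + 1])
        if not has_cpus and total_kb > 0:
            found.append(int(node_dir.name[4:]))  # strip the "node" prefix
    return sorted(found)


if __name__ == "__main__":
    # Candidates for device-attached memory; returns [] on hosts without any.
    print(cpuless_nodes())
```

Passing the sysfs root as a parameter keeps the function testable against a fake directory tree and avoids hard-coding a path that only exists on Linux.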

Intel 5th Generation Xeon.

Memory management has been an important part of computing systems since the advent of modern operating systems decades ago. With higher speeds and more complex data center scaling, memory management has become significantly more important and complex. CXL was developed as an open standard to improve memory and processor connections and ease the development burden for high-speed, high-capacity systems. It allows devices to share memory for better co-processing and more complete utilization of memory across devices.

While standard communications protocols offer interoperability, differences in timing, latency, and bandwidth between manufacturers' implementations, even those built to the same standard, often prevent operation at maximum theoretical performance. CXL operates like an abstraction or translation layer that maintains coherence while improving performance and bandwidth.

Prior to this announcement, CXL memory interoperability across both AMD EPYC and 5th Gen Intel Xeon CPUs had not been proven at the CPU level. Now, with interoperable Marvell Structera CXL products, high-load server farms, such as those serving artificial intelligence (AI) and machine learning (ML) workloads, will be able to boost performance per server and performance per square foot.

Highlights of the Structera CXL Portfolio

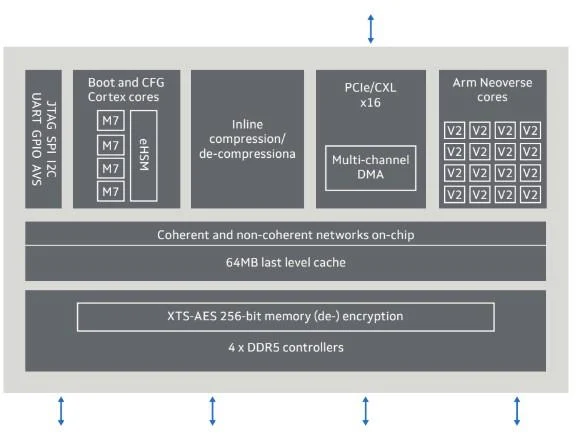

Marvell’s Structera CXL portfolio contains products designed to improve data center memory performance. The chips offer inline compression for higher effective DRAM capacity, DDR4 support for reusing legacy memory, DDR5 support for up to 200 GB/s of memory bandwidth, and four-channel support for greater bandwidth and capacity. Two product lines stand out in the portfolio: the Structera A CXL near-memory accelerators and the Structera X CXL memory-expansion controllers.

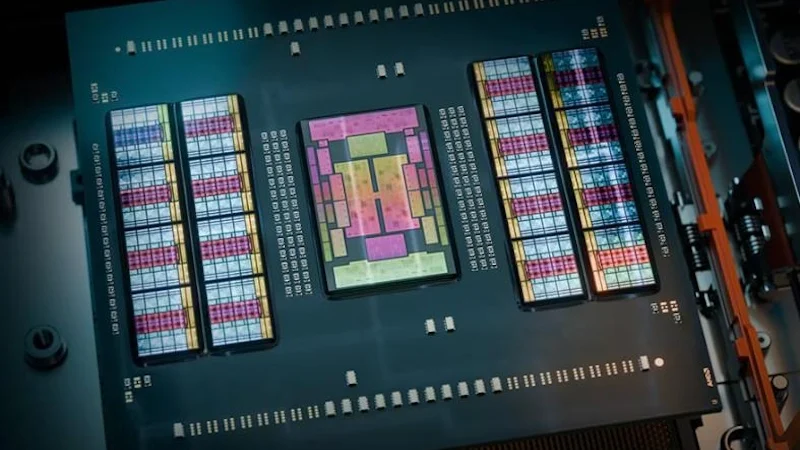

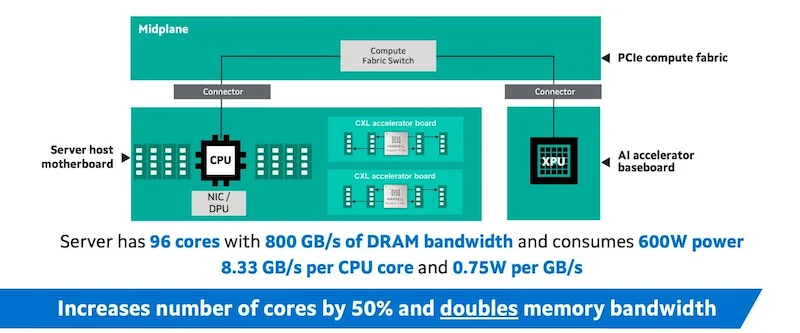

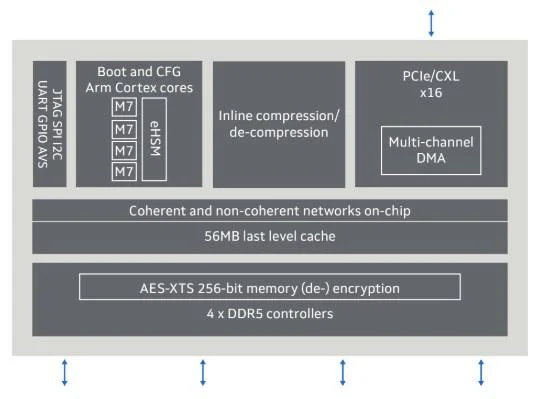

Structera server accelerator architecture.

The Structera A near-memory accelerators feature server-class Arm Neoverse V2 cores, DDR5 support, and up to 1.6 Tbps of memory bandwidth. Marvell optimized the devices particularly for high-bandwidth workloads such as deep learning recommendation models (DLRM). Structera A can also offload memory-intensive processes such as AI, ML, or in-memory databases to its server-class Arm cores.
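The article quotes memory bandwidth in two units: 200 GB/sec in the portfolio overview and 1.6 Tbps here. Assuming both figures describe the same peak and use decimal prefixes (an assumption; the article does not say so explicitly), a quick conversion shows they agree. The helper name is hypothetical.

```python
def gbytes_to_tbits(gb_per_s: float) -> float:
    """Convert decimal gigabytes per second to terabits per second."""
    bits_per_byte = 8
    giga_per_tera = 1000
    return gb_per_s * bits_per_byte / giga_per_tera


print(gbytes_to_tbits(200))  # 1.6
```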

Block diagram of the Structera A 2504 CXL controller.

The Structera X memory expansion controllers support DDR4 and DDR5 DIMMs, enabling the deployment of terabytes of memory capacity while optimizing both cost efficiency and power consumption.

Structera X block diagram.

High-speed coherent memory sharing lets cloud service providers and server OEMs dramatically increase memory capacity and bandwidth. Because shared memory can go wherever it is needed, it is utilized more fully, so sharing can raise usable memory beyond what an equivalent physical upgrade to each server would provide.
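The utilization argument can be illustrated with a toy model. The sketch below compares adding the same total amount of memory in two ways: split evenly as private upgrades to each server, or placed in one shared pool that absorbs whichever servers overflow their local DRAM. All capacities and demands are hypothetical, and the model ignores latency and bandwidth differences; whether a real Structera deployment pools memory across hosts depends on topology the article does not describe.

```python
def served_static(demands, local_gb, extra_gb_per_server):
    """Demand (GB) served when every server gets a fixed private upgrade."""
    cap = local_gb + extra_gb_per_server
    return sum(min(d, cap) for d in demands)


def served_pooled(demands, local_gb, pool_gb):
    """Demand (GB) served when overflow is drawn from one shared pool."""
    local = sum(min(d, local_gb) for d in demands)
    overflow = sum(max(d - local_gb, 0) for d in demands)
    return local + min(overflow, pool_gb)


# Hypothetical per-server memory demand in GB: two light, two heavy.
demands = [100, 700, 200, 600]

# Both options add 512 GB in total to four servers with 256 GB each.
static = served_static(demands, local_gb=256, extra_gb_per_server=128)
pooled = served_pooled(demands, local_gb=256, pool_gb=512)

print(static, pooled)  # 1068 1324
```

In this example the private upgrades leave memory stranded on the two lightly loaded servers, while the pool directs all 512 GB to the servers that actually need it.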

Interoperability Between Leading Data Center CPUs

Intel's 5th Gen Xeon and AMD's EPYC CPUs are the leading multi-purpose data center CPUs. Both processor lines include on-chip, AI-specific capability and can power stand-alone servers or act as host CPUs in GPU-centric servers, and both are used in the most demanding applications. As a data center expands, either one may be the appropriate choice for a given deployment. However, subtle differences in timing, speed, and capability between the two make interoperability with memory devices more challenging.

By demonstrating CXL interoperability between the industry’s two flagship CPU lines, Marvell makes data center scaling easier. Regardless of the CPU family, the use of Structera will allow data center build-out while maintaining memory coherence, stability, and scalability for high-performance memory expansion. Data center operators can choose CPU architecture based on the specific strengths of each platform and maintain consistent performance.
